Introduction

We have seen how probability plays a key role in machine learning. In this chapter, we are going to discuss further how probability theory can be used to make predictions. Data is generated from a process that is not completely known. Since we do not have complete knowledge about the process, probability theory is used to model the uncertainty within the process. In this chapter, we will discuss how probability theory can be used to represent uncertainty and make prediction.

Probability Theory

A random process by which we observe something uncertain is called a random experiment. A random experiment is any situation where the process can lead to more than one possible outcome. Consider a random process of tossing a coin which may result in two mutually exclusive outcomes, either head or tail turns up. The outcomes of this process cannot be predicted with certainty. We can only say how likely each outcome is. The chance of a particular event happening is called probability. Another important concept that is associated with probability is the random variable. A random variable is a numerical description of the outcome of a random experiment.

Consider the process of tossing a coin with two mutually exclusive outcomes, which are either head or tail. We define a random variable ![]() that takes one of the two values. Let

that takes one of the two values. Let ![]() be the value for heads and

be the value for heads and ![]() denotes the outcome of tails. The probability of an event

denotes the outcome of tails. The probability of an event ![]() is denoted by

is denoted by ![]() or

or ![]() and its value satisfies

and its value satisfies ![]() and

and ![]() . A higher probability value indicates a higher chance of an event occurring. Thus, using probability value, we can predict the outcome of the next toss. For example, the prediction will be head if

. A higher probability value indicates a higher chance of an event occurring. Thus, using probability value, we can predict the outcome of the next toss. For example, the prediction will be head if ![]() and tails otherwise.

and tails otherwise.

If ![]() is not known, we can estimate the probability by repeatedly tossing the coin and observing the outcomes. Given a sample of outcomes of past

is not known, we can estimate the probability by repeatedly tossing the coin and observing the outcomes. Given a sample of outcomes of past ![]() tosses, the estimated probability of heads is

tosses, the estimated probability of heads is

![]()

where ![]() is the number of tosses with outcome heads. For example, if the number of tosses is 10 and the sample

is the number of tosses with outcome heads. For example, if the number of tosses is 10 and the sample ![]() , the estimated probability is

, the estimated probability is

![]()

Given two independent events, ![]() and

and ![]() , such as getting heads from tossing two coins. Note that independent events means each event is not affected by other events. We can calculate the probability of the two events happening as follows:

, such as getting heads from tossing two coins. Note that independent events means each event is not affected by other events. We can calculate the probability of the two events happening as follows:

![]()

Some events are dependent on other events. Consider taking two balls from a jar containing 5 red and 5 blue balls. Initially, both red and blue balls have equal chance of being taken. If the first ball was red, the second ball is less likely to be red as there are 4 red balls left in the jar. The probability that the second ball is chosen is called conditional probability. Let ![]() and

and ![]() be the event of selecting the second and first balls respectively. The conditional probability of event

be the event of selecting the second and first balls respectively. The conditional probability of event ![]() given that event

given that event ![]() is given as follows:

is given as follows:

![]()

The conditional probability can be rewritten as follows:

![]()

where ![]() is the conditional probability of event

is the conditional probability of event ![]() given

given ![]() , also known as the likelihood and

, also known as the likelihood and ![]() is the conditional probability of event

is the conditional probability of event ![]() and

and ![]() , also known as the posterior probability.

, also known as the posterior probability. ![]() and

and ![]() are the probabilities of observing

are the probabilities of observing ![]() and

and ![]() respectively which can be interpreted as the prior probability and evidence. Using the law of total probability,

respectively which can be interpreted as the prior probability and evidence. Using the law of total probability, ![]() . The above definition is known as Bayes’ rule [1].

. The above definition is known as Bayes’ rule [1].

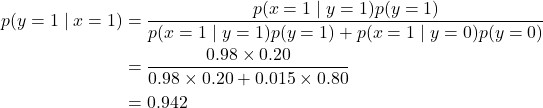

The following example illustrates the use of Bayes’ rule in medical testing. Suppose that for every 100 women, 25 are indeed pregnant.

![]()

The sensitivity of the pregnancy test is 98% which means if a woman is pregnant, the test will be positive with a probability of 0.98.

![]()

Additionally, the false positive rate of the test kit is 1.5%.

![]()

Suppose a woman takes a pregnancy test and the outcome of the test is positive. What is the probability the woman is pregnant?

Since the probability is 0.942, we can conclude that the woman is pregnant.

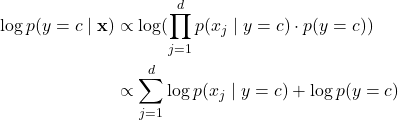

Naive Bayes

We have seen how we can perform classification when uncertainty is modeled using probabilities i.e. the likelihood, prior and evidence. But, in many cases, this information is not available to us. In the absence of the information, we can estimate the probabilities from past observations (training data). Then, using the estimated probabilities, we can classify the feature vector ![]() by applying them to the Bayes’ rule as follows:

by applying them to the Bayes’ rule as follows:

![]()

The key to using this approach is to estimate the class-conditional probabilities of the features or the likelihood. Let ![]() be the number of features, the likelihood is

be the number of features, the likelihood is

![]()

However, to calculate the likelihood is cumbersome especially when the number of features is large. Naive Bayes is a simple classifier that is based on applying the Bayes’ rule with a strong independence assumption between the features [2]. Due to this assumption, the calculation of likelihood is simplified to

![]()

Thus, the posterior probability becomes

![]()

Since ![]() is a constant, and the prediction is made by comparing the posterior probabilities and choose the class with the largest probability. Thus, the denominator can be omitted and the classification rule becomes

is a constant, and the prediction is made by comparing the posterior probabilities and choose the class with the largest probability. Thus, the denominator can be omitted and the classification rule becomes

![]()

The prior probability ![]() can be estimated by assuming equiprobable classes or by calculating the number of instances in the dataset which belong to class

can be estimated by assuming equiprobable classes or by calculating the number of instances in the dataset which belong to class ![]() . The estimation of the conditional probability or feature’s distribution

. The estimation of the conditional probability or feature’s distribution ![]() is done by assuming the distribution of the features [3]. The assumption is done based on the type of the feature which is described in the following sections.

is done by assuming the distribution of the features [3]. The assumption is done based on the type of the feature which is described in the following sections.

Categorical Naive Bayes

First, we discuss the case where the features are categorical data. Assuming there is a set of features and each feature ![]() has its own categorical distribution. For each feature

has its own categorical distribution. For each feature ![]() in the training set, the categorical distribution is estimated conditioned on class

in the training set, the categorical distribution is estimated conditioned on class ![]() . The conditional probability of label

. The conditional probability of label ![]() in feature

in feature ![]() given class

given class ![]() is estimated as

is estimated as

![]()

where ![]() is the number of times label

is the number of times label ![]() appears in feature

appears in feature ![]() which belongs to class

which belongs to class ![]() and

and ![]() is the number of instances with class

is the number of instances with class ![]() .

.

Bernoulli Naive Bayes

Bernoulli distribution is a discrete distribution with only two possible values for the random variable. Bernoulli naive Bayes is typically used for document or text classification in which the features are the binary word occurrence ![]() , where

, where ![]() is a boolean feature expressing the presence (occurrence) or absence of the

is a boolean feature expressing the presence (occurrence) or absence of the ![]() -th word in the document. If the probability of a word’s occurrence is

-th word in the document. If the probability of a word’s occurrence is ![]() , then the probability of absence is

, then the probability of absence is ![]() . Thus, the likelihood of a document

. Thus, the likelihood of a document ![]() given class

given class ![]() is given by

is given by

![]()

which is the product of the conditional probability of each word’s occurrence ![]() given class

given class ![]() .

. ![]() is the probability of

is the probability of ![]() -th word occurring in class

-th word occurring in class ![]() . This probability can be estimated by counting the number of times

. This probability can be estimated by counting the number of times ![]() -th word occurs in the documents labeled with class

-th word occurs in the documents labeled with class ![]()

Multinomial Naive Bayes

Multinomial distribution is a generalized form of binomial distribution, a discrete distribution of a random variable with more than two outcomes. Using multinomial distribution, in the case of document classification, rather than word occurrence vectors, we can use the frequency of a word as a feature. The likelihood of a document given class ![]() is given by

is given by

![]()

and ![]() can be estimated as follows:

can be estimated as follows:

![]()

where ![]() is the number of times word

is the number of times word ![]() appears in the document with class

appears in the document with class ![]()

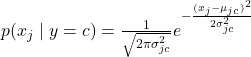

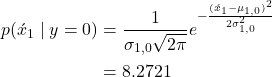

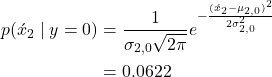

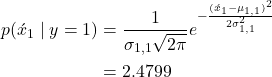

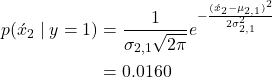

Gaussian Naive Bayes

In the case of real-valued features, we typically assume the features are distributed according to the Gaussian distribution. The likelihood of feature ![]() given class

given class ![]() is given by

is given by

where ![]() is the mean of feature x_j with class

is the mean of feature x_j with class ![]() and

and ![]() is the variance of feature x_j with class

is the variance of feature x_j with class ![]() .

.

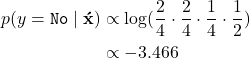

Log Trick

Multiplying the conditional probabilities may cause computational instability or known as numerical underflow. This is because the value of ![]() is often very small especially if

is often very small especially if ![]() is a high dimensional vector. Thus, to avoid the numerical underflow, we take logarithm of the conditional probabilities when applying Bayes rule. Note that multiplication operation becomes an addition in the logarithm space.

is a high dimensional vector. Thus, to avoid the numerical underflow, we take logarithm of the conditional probabilities when applying Bayes rule. Note that multiplication operation becomes an addition in the logarithm space.

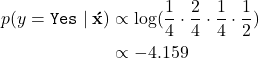

Numerical Example 1

Given the following dataset.

| Outlook | Temperature | Humidity | Play Tennis |

| Sunny | Hot | High | No |

| Cloudy | Hot | High | Yes |

| Rain | Mild | High | Yes |

| Rain | Cool | Normal | No |

| Cloudy | Mild | High | Yes |

| Sunny | Cool | Normal | Yes |

| Rain | Mild | High | No |

| Sunny | Mild | High | No |

![]()

Prior probability

![]() ,

, ![]()

Conditional probability for ![]()

![]() ,

, ![]()

![]() ,

, ![]()

![]() ,

, ![]()

Conditional probability for ![]()

![]() ,

, ![]()

![]() ,

, ![]()

![]() ,

, ![]()

Conditional probability for ![]()

![]() ,

, ![]()

![]() ,

, ![]()

Given ![]() , predict the class of

, predict the class of ![]() .

.

Posterior probability

Since ![]() , the prediction is No.

, the prediction is No.

Numerical Example 2

Given the following dataset where 0 is female and 1 is male.

| Height (m) | Weight (kg) | Gender |

| 1.72 | 68 | 1 |

| 1.83 | 79 | 1 |

| 1.71 | 65 | 0 |

| 1.95 | 83 | 1 |

| 1.68 | 60 | 0 |

| 1.81 | 76 | 1 |

| 1.75 | 69 | 0 |

| 1.67 | 57 | 0 |

![]()

Prior probability

![]() ,

, ![]()

![]() and

and ![]() for

for ![]()

![]() ,

, ![]()

![]() ,

, ![]()

![]() and

and ![]() for

for ![]()

![]() ,

, ![]()

![]() ,

, ![]()

Given ![]() , predict the gender of

, predict the gender of ![]() .

.

Probability density function of ![]() given class

given class ![]() .

.

Posterior probability of class ![]() .

.

![]()

Probability density function of ![]() given class

given class ![]() .

.

Posterior probability of class ![]() .

.

![]()

Since ![]() , the prediction is class

, the prediction is class ![]() (female).

(female).

Naive Bayes is a Linear Classifier

In many cases, naive Bayes leads to a linear decision boundary. Given an input ![]() , the prediction is 1 if

, the prediction is 1 if

![]()

Taking a log of both sides and using Bayes rule, we have

![]()

Suppose the features are Gaussian, ![]()

\noindent where ![]() and

and ![]() are the mean and variance of the distribution. Note that

are the mean and variance of the distribution. Note that ![]() is diagonal and symmetric.

is diagonal and symmetric.

![]()

Expanding the expression, we have

![]()

Assuming both classes share the same covariance matrix, ![]() , the quadratic terms are canceled. We have

, the quadratic terms are canceled. We have

![]()

![]() can be considered as a constant

can be considered as a constant ![]() . Thus, we have

. Thus, we have

![]()

which is a linear form in ![]() .

.

References

[1] P. Davies, “Kendall’s Advanced Theory of Statistics. Volume 1. Distribution Theory.” Wiley Online Library, 1988.

[2] D. J. Hand and K. Yu, “Idiot’s Bayes—not so stupid after all?,” International statistical review, vol. 69, no. 3, pp. 385–398, 2001.

[3] G. H. John and P. Langley, “Estimating continuous distributions in Bayesian classifiers,” arXiv preprint arXiv:1302.4964, 2013.