Population ageing and the growing dependent population are recognized as major social and economic issues for the coming decades. According to the World Health Organization (WHO), there will be an estimated 2 billion people aged 60 and older by 2050 [1]. Physical activity plays a major role in healthy ageing and is one of the requirements for maintaining healthy functioning. Physical activity has also been shown to reduce the risk of chronic diseases such as cardiovascular disease, diabetes and osteoporosis [2]. Wearable sensors can act as an interface to remotely measure a person’s body motion and vital signs [3]. These include dedicated sensors attached to the body directly, sensors attached indirectly (i.e. embedded in clothes and wristwatches), and sensors available on portable devices such as mobile phones. The sensor data can then be interpreted to recognize the user’s activities and physiological states.

Activity recognition is the process of discovering activity patterns and recognizing activities from a series of sensor data. It can be used to analyze a person’s daily activities and behaviour to evaluate his or her general health. For instance, difficulties in performing transitional activities such as rising from a chair and sitting down can limit independence. They can also lead to a less active lifestyle and a subsequent deterioration in health [4]. Falls cause two thirds of accidental deaths among elderly people aged 65 years or older, and they are the most common type of accident among older adults [5]. Most falls occurred during transitions such as from standing to sitting and vice versa, and when initiating walking [6]. The duration of these transitions and the number of successful attempts are associated with falls or fall risk [7]. Among patients with Parkinson’s disease, falls are commonly caused by freezing of gait when initiating walking or turning [8].

In this article, I present the experimental results of classifying physical activity data using a Convolutional Neural Network (CNN). The data were collected from fifteen (15) elderly people performing a series of physical activities in their homes. Inertial sensors (Thunderboard Sense, manufactured by Silicon Labs) were attached at the chest, waist and ankle. Only data from the waist sensor are used in the experiments.

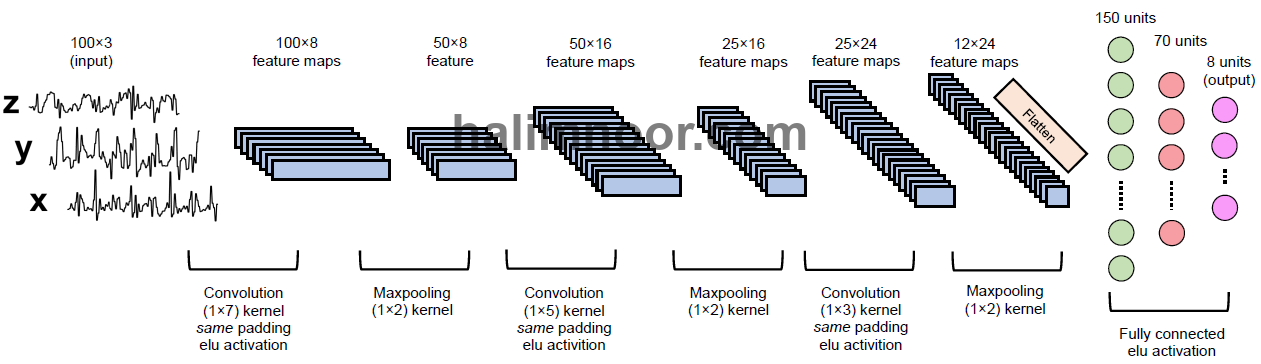

A CNN is a deep artificial neural network. It is composed of convolutional layers, often interleaved with subsampling (also known as pooling) layers, followed by fully connected layers. The convolutional layers act as feature extractors by convolving learned filters over the sensor data. Using all of the extracted features (feature maps) directly for classification is computationally expensive. Pooling layers are therefore used to downsample the feature maps produced by the convolutional layers, which reduces their dimensionality. The fully connected layers are the same as the layers in a conventional artificial neural network. The CNN architecture implemented in the experiments is shown in Fig. 2.
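The following minimal NumPy sketch makes the roles of convolution and pooling concrete. It applies a single convolutional layer, followed by max pooling, to a three-channel signal. The window length (128 samples), number of filters (16), kernel size (5), pooling size (2) and the random weights are hypothetical values chosen for illustration. They are not the settings used in the experiments.

```python
import numpy as np

def conv1d(x, w, b):
    """Valid 1D convolution (cross-correlation, as in most deep learning libraries).

    x: (channels_in, length) input signal
    w: (filters, channels_in, kernel) weights
    b: (filters,) biases
    returns: (filters, length - kernel + 1) feature maps
    """
    n_filters, _, k = w.shape
    out_len = x.shape[1] - k + 1
    out = np.empty((n_filters, out_len))
    for t in range(out_len):
        # Each output sample is a weighted sum over all input channels in a k-sample window.
        out[:, t] = np.tensordot(w, x[:, t:t + k], axes=([1, 2], [0, 1])) + b
    return out

def max_pool1d(x, size=2):
    """Non-overlapping max pooling along time; an incomplete final window is dropped."""
    n = x.shape[1] // size
    return x[:, :n * size].reshape(x.shape[0], n, size).max(axis=2)

rng = np.random.default_rng(0)
signal = rng.standard_normal((3, 128))         # hypothetical 3-channel acceleration window
weights = rng.standard_normal((16, 3, 5)) * 0.1
bias = np.zeros(16)

feature_maps = conv1d(signal, weights, bias)   # 16 feature maps of 124 samples each
pooled = max_pool1d(feature_maps, size=2)      # pooling halves the time axis
print(feature_maps.shape, pooled.shape)        # (16, 124) (16, 62)
```

Each filter produces one feature map. Max pooling with a size of 2 keeps only the largest value in every pair of samples, which halves the amount of data passed to the next layer.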

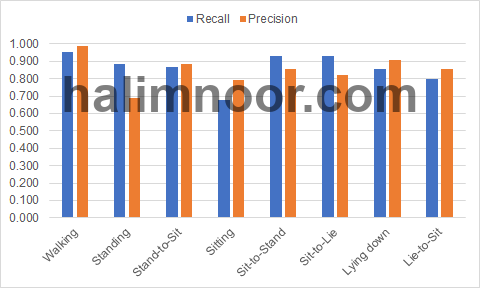

The input consists of three-channel acceleration signals. The signals are fed through three convolutional and max-pooling layers that extract the underlying features from the signals. The resulting feature maps are then flattened and fed to two fully connected layers. These layers classify them into eight activity classes: walking (0), standing (1), stand-to-sit (2), sitting (3), sit-to-stand (4), sit-to-lie (5), lying face-up (6) and lie-to-sit (7). The convolutional layers and the fully connected layers use the exponential linear unit (ELU) activation function. The classification results, in terms of recall, are given in Fig. 3.
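The data flow described above can be sketched as a NumPy forward pass. The sketch passes the input through three convolution/ELU/max-pooling stages, flattens the result, and applies two fully connected layers to produce eight class scores. Several values are hypothetical placeholders, because those details live in Fig. 2 rather than the text:

- the window length (128 samples);
- the filter counts (16, 32, 64);
- the kernel size (5);
- the pooling size (2);
- the hidden-layer width (100);
- the random weights.

The sketch does not train the network. The text does not specify how the output layer is normalized, so the sketch simply takes the arg-max of the final scores.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

ACTIVITIES = ["walking", "standing", "stand-to-sit", "sitting",
              "sit-to-stand", "sit-to-lie", "lying face-up", "lie-to-sit"]

def elu(x, alpha=1.0):
    # ELU: x for x > 0, alpha * (exp(x) - 1) otherwise; min() avoids overflow in expm1.
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))

def conv1d(x, w, b):
    # x: (C_in, L), w: (F, C_in, K) -> (F, L - K + 1), valid cross-correlation.
    windows = sliding_window_view(x, w.shape[2], axis=1)   # (C_in, L - K + 1, K)
    return np.einsum("fck,ctk->ft", w, windows) + b[:, None]

def max_pool1d(x, size=2):
    # Non-overlapping max pooling along the time axis.
    n = x.shape[1] // size
    return x[:, :n * size].reshape(x.shape[0], n, size).max(axis=2)

def init_params(rng, window=128, channels=3, filters=(16, 32, 64),
                kernel=5, pool=2, hidden=100, n_classes=8):
    """Random weights for a hypothetical configuration (not the trained model)."""
    params, c_in, length = {"conv": [], "pool": pool}, channels, window
    for f in filters:
        params["conv"].append((rng.standard_normal((f, c_in, kernel)) * 0.1, np.zeros(f)))
        c_in, length = f, (length - kernel + 1) // pool     # track feature-map size
    flat = c_in * length                                     # size after flattening
    params["fc1"] = (rng.standard_normal((hidden, flat)) * 0.05, np.zeros(hidden))
    params["fc2"] = (rng.standard_normal((n_classes, hidden)) * 0.05, np.zeros(n_classes))
    return params

def forward(params, x):
    h = x
    for w, b in params["conv"]:
        h = max_pool1d(elu(conv1d(h, w, b)), params["pool"])  # conv -> ELU -> max pool
    h = h.reshape(-1)                                          # flatten the feature maps
    w1, b1 = params["fc1"]
    h = elu(w1 @ h + b1)                                       # fully connected + ELU
    w2, b2 = params["fc2"]
    return w2 @ h + b2                                         # one score per activity class

rng = np.random.default_rng(0)
params = init_params(rng)
scores = forward(params, rng.standard_normal((3, 128)))
print(scores.shape, ACTIVITIES[int(np.argmax(scores))])
```

With these placeholder settings, the time axis shrinks from 128 to 62, 29 and finally 12 samples. As a result, the first fully connected layer receives 64 × 12 = 768 flattened features.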

Overall, the walking activity signals are well classified, with recall above 0.90. Among the static activities (standing, sitting and lying face-up), the classifier correctly classifies most of the standing and lying face-up signals. However, sitting is not well classified, as indicated by its low recall. The transitional activities are, in general, also well classified. The overall recognition accuracy of the classifier is 0.856.
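Recall and accuracy, the two measures quoted above, can both be computed from a confusion matrix. Below is a minimal NumPy sketch using made-up toy labels, which are not the experimental data. The function names are hypothetical. scikit-learn provides equivalent functions (`recall_score` with `average=None`, and `accuracy_score`).

```python
import numpy as np

def confusion_and_recall(y_true, y_pred, n_classes=8):
    """Confusion matrix (rows = true class, columns = predicted class) and per-class recall."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)           # count each (true, predicted) pair
    support = cm.sum(axis=1)                     # number of true samples per class
    with np.errstate(invalid="ignore"):
        recall = np.diag(cm) / support           # NaN for classes absent from y_true
    return cm, recall

def accuracy(y_true, y_pred):
    """Fraction of all samples whose predicted class equals the true class."""
    return float(np.mean(np.asarray(y_true) == np.asarray(y_pred)))

# Hypothetical toy labels (NOT the experimental data): two of the three
# sitting (3) windows are misclassified.
y_true = np.array([0, 0, 0, 0, 3, 3, 3, 1, 1, 6])
y_pred = np.array([0, 0, 0, 0, 1, 3, 1, 1, 1, 6])

cm, recall = confusion_and_recall(y_true, y_pred)
print(np.round(recall[[0, 1, 3, 6]], 3))  # walking, standing, sitting, lying face-up
print(accuracy(y_true, y_pred))           # 0.8
```

Accuracy pools all samples together. A class with low recall, such as sitting in the results above, can therefore coexist with a fairly high overall accuracy.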

There is no guarantee that the presented model is the optimal CNN architecture for activity recognition. Further fine-tuning, such as increasing the number of layers, is needed to improve the classification model. A deeper network might learn better representations, resulting in higher recognition accuracy.
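One practical constraint applies when adding layers. With valid (unpadded) convolutions and non-overlapping pooling, every extra convolution/pooling block shortens the time axis, so the input window length caps the usable depth. The short sketch below counts the remaining feature-map length per block under hypothetical settings: a 128-sample window, kernel size 5 and pooling size 2. The helper names are illustrative only.

```python
def feature_length(window, n_blocks, kernel=5, pool=2):
    """Time length left after n_blocks of valid convolution + non-overlapping pooling."""
    length = window
    for _ in range(n_blocks):
        length = (length - kernel + 1) // pool
        if length <= 0:
            return 0          # the signal has been reduced to nothing
    return length

def max_blocks(window, kernel=5, pool=2):
    """Largest number of blocks that still leaves at least one time step."""
    n = 0
    while feature_length(window, n + 1, kernel, pool) >= 1:
        n += 1
    return n

print([feature_length(128, n) for n in range(1, 6)])  # [62, 29, 12, 4, 0]
print(max_blocks(128))                                # 4
```

Under these assumptions, only one more block could be added beyond three. Deeper variants would need longer windows, "same" (padded) convolutions, or pooling after only some of the convolutional layers.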

[1] WHO, “WHO | Facts about ageing,” WHO, 30-Sep-2014. [Online]. Available: http://www.who.int/ageing/about/facts/en/.

[2] F. W. Booth, C. K. Roberts, and M. J. Laye, “Lack of exercise is a major cause of chronic diseases,” Compr. Physiol., vol. 2, no. 2, pp. 1143–1211, Apr. 2012.

[3] S. J. Olshansky et al., “The Future of Smart Health,” Computer, vol. 49, no. 11, pp. 14–21, Nov. 2016.

[4] B. Unver, V. Karatosun, and S. Bakirhan, “Ability to rise independently from a chair during 6-month follow-up after unilateral and bilateral total knee replacement,” J. Rehabil. Med., vol. 37, no. 6, pp. 385–387, Nov. 2005.

[5] S. Deandrea, F. Bravi, F. Turati, E. Lucenteforte, C. La Vecchia, and E. Negri, “Risk factors for falls in older people in nursing homes and hospitals. A systematic review and meta-analysis,” Arch. Gerontol. Geriatr., vol. 56, no. 3, pp. 407–415, May 2013.

[6] K. Rapp, C. Becker, I. D. Cameron, H.-H. König, and G. Büchele, “Epidemiology of Falls in Residential Aged Care: Analysis of More Than 70,000 Falls From Residents of Bavarian Nursing Homes,” J. Am. Med. Dir. Assoc., vol. 13, no. 2, pp. 187.e1–187.e6, Feb. 2012.

[7] A. Zijlstra, M. Mancini, U. Lindemann, L. Chiari, and W. Zijlstra, “Sit-stand and stand-sit transitions in older adults and patients with Parkinson’s disease: event detection based on motion sensors versus force plates,” J. Neuroengineering Rehabil., vol. 9, p. 75, 2012.

[8] J. D. Schaafsma, Y. Balash, T. Gurevich, A. L. Bartels, J. M. Hausdorff, and N. Giladi, “Characterization of freezing of gait subtypes and the response of each to levodopa in Parkinson’s disease,” Eur. J. Neurol., vol. 10, no. 4, pp. 391–398, Jul. 2003.